OPTIMICA Compiler Toolkit🔗

The OPTIMICA Compiler Toolkit (OCT) is the calculation engine (both compiler and solver) used by Modelon Impact. It comes with a Modelica compiler with capabilities beyond dynamic simulation by offering unique features for optimization and steady-state computations.

Main features🔗

- A Modelica compiler compliant with the Modelica language specification (MLS) 3.4 supporting both Modelica Standard Library (MSL) 3.2.3-build3 as well as the Modelica Standard Library version 4.0.0 with the following exception: The language elements described in the MLS, chapter 16- Synchronous Language Elements and chapter 17 - State Machines, are not supported.

- The compiler generates Functional Mock-up Units (FMUs), including Model Exchange and Co-simulation as well as version 1.0 and 2.0 of the FMI standard.

- Dynamic simulation algorithms for integration of large-scale and stiff systems. Algorithms include CVode and Radau.

- Dynamic optimization algorithms based on collocation for solving optimal control and estimation problems. Dynamic optimization problems are encoded in Optimica, an extension to Modelica.

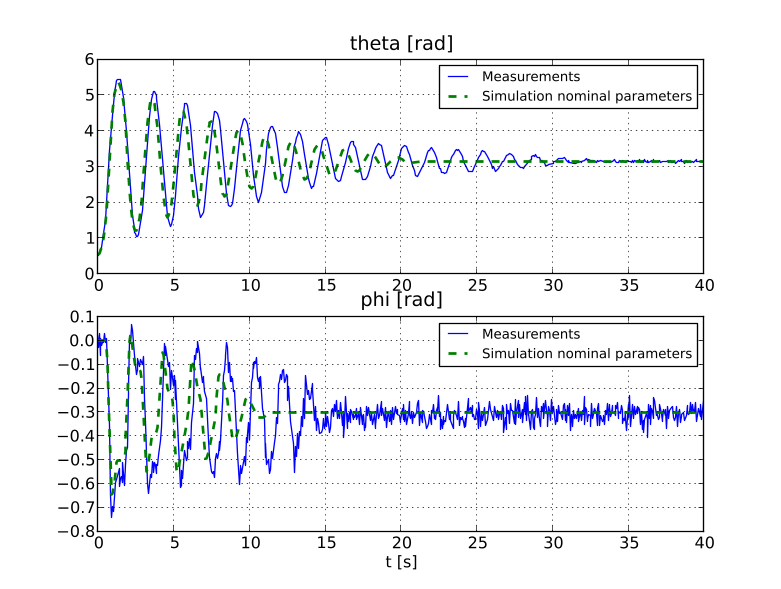

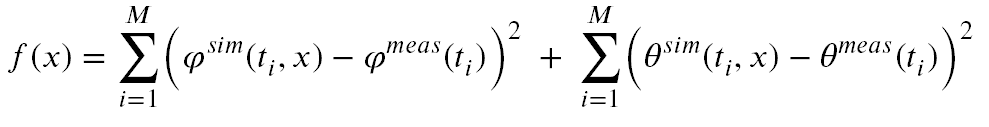

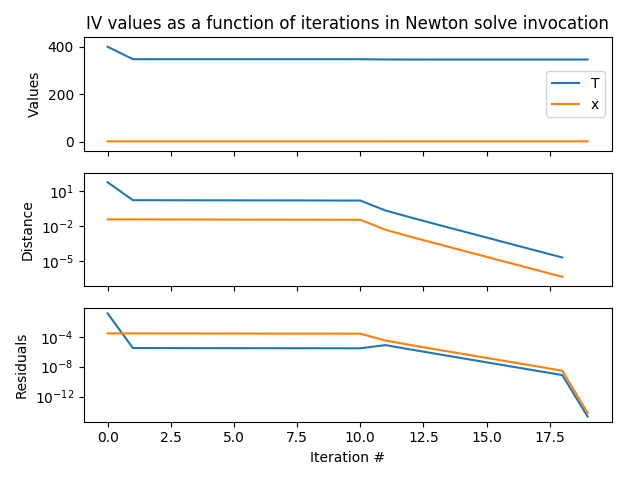

- A derivative-free model calibration algorithm to estimate model parameters based on measurement data.

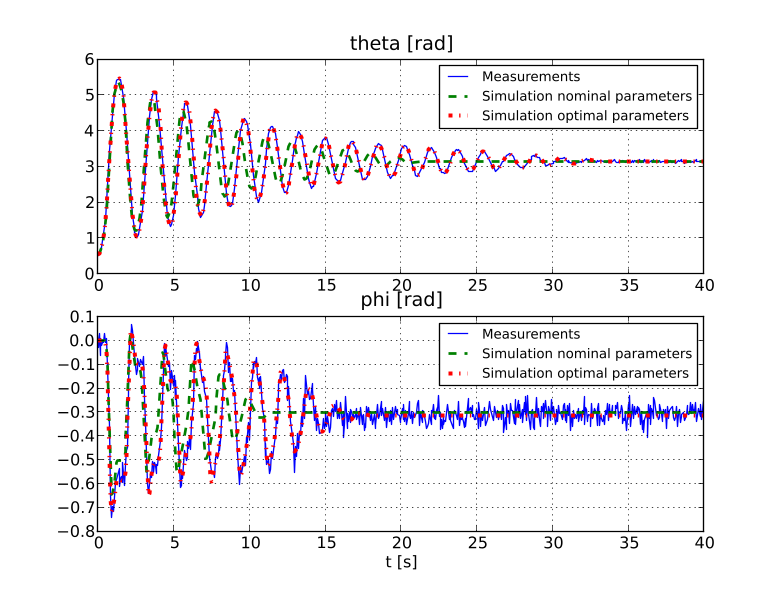

- A non-linear solver for solving large-scale systems of equations arising, e.g., in steady-state applications. Efficient and robust steady-state problem formulation is enable by Physics Based Solving, which enables user specified selection of residuals and iteration variables.

- Support for encrypted and licensed Modelica libraries.

- Support for state-of-the-art numerical algorithms for dynamic optimization, notably the HSL solver MA57 which provides improved robustness and performance.

- A compiler API is available to extract information and manipulate, e.g., packages, models, parameters and annotations, from Modelica libraries.

- Scripting APIs in Python is available to script automation of compilation, simulation and optimization of Modelica and FMI models.

- Support for Python 3.9 on Linux and Python 3.7 on Windows.

Working with Models in Python🔗

Introduction to models🔗

Modelica and Optimica models can be compiled and loaded as model objects using the OCT Python interface. These model objects can be used for both simulation and optimization purposes. This chapter will cover how to compile Modelica and Optimica models, set compiler options, load the compiled model in a Python model object and use the model object to perform model manipulations such as setting and getting parameters.

The different model objects in OCT🔗

There are several different kinds of model objects that can be created with OCT: FMUModel(ME/CS)(1/2) (i.e. FMUModelME1, FMUModelCS1, FMUModelME2, and FMUModelCS2) and OptimizationProblem. The FMUModel(ME/CS)(1/2) is created by loading an FMU (Functional Mock-up Unit), which is a compressed file compliant with the FMI (Functional Mock-up Interface) standard. The OptimizationProblem is created by transferring an optimization problem into the CasADi-based optimization tool chain.

FMUs are created by compiling Modelica models with OCT, or any other tool supporting FMU export. OCT supports export of FMUs for Model Exchange (FMU-ME) and FMUs for Co-Simulation (FMU-CS), version 1.0 and 2.0. Import of FMU-CS version 2.0 is also supported. Generated FMUs can be loaded in an FMUModel(ME/CS) (1/2) object in Python and then be used for simulation purposes. Optimica models can not be compiled into FMUs.

OptimizationProblem objects for CasADi optimization do not currently have a corresponding file format, but are transferred directly from the OCT compiler, based on Modelica and Optimica models. They contain a symbolic representation of the optimization problem, which is used with the automatic differentiation tool CasADi for optimization purposes. Read more about CasADi and how an OptimizationProblem object can be used for optimization in Simulations of FMUs in Python.

Compilation🔗

This section brings up how to compile a model for an FMU-ME / FMU-CS. Compiling a model to an FMU-ME / FMU-CS will be demonstrated in Simple FMU-ME compilation example and Simple FMU-CS compilation example respectively.

For more advanced usage of the compiler functions, there are compiler options and arguments which can be modified. These will be explained in Compiler settings.

Simple FMU-ME compilation example🔗

The following steps compile a model to an FMU-ME version 2.0:

- Import the OCT compiler function compile_fmu from the package pymodelica.

- Specify the model and model file.

- Perform the compilation.

This is demonstrated in the following code example:

#Import the compiler function

from pymodelica import compile_fmu

# Specify Modelica model and model file (.mo or .mop)

model_name = 'myPackage.myModel'

mo_file = 'myModelFile.mo'

# Compile the model and save the return argument, which is the file name of the FMU

my_fmu = compile_fmu(model_name, mo_file)

There is a compiler argument target that controls whether the model will be exported as an FMU-ME or FMU-CS. The default is to compile an FMU-ME, so target does not need to be set in this example. The compiler argument version specifies if the model should be exported as an FMU 1.0 or 2.0. As the default is to compile an FMU 2.0, version does not need to be set either in this example. To compile an FMU 1.0, version should be set to '1.0'.

Once compilation has completed successfully, an FMU-ME 2.0 will have been created on the file system. The FMU is essentially a compressed file archive containing the files created during compilation that are needed when instantiating a model object. Return argument for compile_fmu is the file path of the FMU that has just been created, this will be useful later when we want to create model objects. More about the FMU and loading models can be found in Loading models.

In the above example, the model is compiled using default arguments and compiler options - the only arguments set are the model class and file name. However, compile_fmu has several other named arguments which can be modified. The different arguments, their default values and interpretation will be explained in Compiler settings.

Simple FMU-CS compilation example🔗

The following steps compiles a model to an FMU-CS version 2.0:

-

Import the OCT compiler function compile_fmu from the package pymodelica.

-

Specify the model and model file.

-

Set the argument target = 'cs'

-

Perform the compilation.

This is demonstrated in the following code example:

# Import the compiler function

from pymodelica import compile_fmu

# Specify Modelica model and model file (.mo or .mop)

model_name = 'myPackage.myModel'

mo_file = 'myModelFile.mo'

# Compile the model and save the return argument, which is the file name of the FMU

my_fmu = compile_fmu(model_name, mo_file, target='cs')

Compiling from libraries🔗

The model to be compiled might not be in a standalone .mo file, but rather part of a library consisting of a directory structure containing several Modelica files. In this case, the file within the library that contains the model should not be given on the command line. Instead, the entire library should to added to the list of libraries that the compiler searches for classes in. This can be done in several ways (here library directory refers to the top directory of the library, which should have the same name as the top package in the library):

• Giving the path to the library directory in the file_name argument of the compilation function. This allows adding a specific library to the search list (as opposed to adding all libraries in a specific directory).

• Set the directory containing a library directory via the keyword argument named modelicapath of the compilation function. This allows for adding several libraries to the search list. For example if modelicapath=C : \MyLibs, then the compiler sets MODELICAPATH to C : \MyLibs and all libraries within are added to the search list during compilation.

The Modelica Standard Library (MSL) that is included in the installation is loaded by default, when starting the OCT Python shell . The version of MSL that is loaded is added on the compiler option named msl_version or any existing uses annotations within the model being compiled.

The Python code example below demonstrates these methods:

# Import the compiler function

from pymodelica import compile_fmu

# Compile an example model from the MSL

fmu1 = compile_fmu('Modelica.Mechanics.Rotational.Examples.First')

# Compile an example model utilizing the modelica path keyword argument assuming

# the library MyLibrary is located in C:/MyLibs, i.e. C:/MyLibs/MyLibrary exists

fmu2 = compile_fmu('MyLibrary.MyModel', 'C:/MyLibs/MyLibrary')

Compiler settings🔗

The compiler function arguments can be listed with the interactive help in Python. The arguments are explained in the corresponding Python docstring which is visualized with the interactive help. This is demonstrated for compile_fmu below. The docstring for any other Python function for can be displayed in the same way.

compile_fmu arguments🔗

The compile_fmu arguments can be listed with the interactive help.

# Display the docstring for compile_fmu with the Python command 'help'

from pymodelica import compile_fmu

help(compile_fmu)

Help on function compile_fmu in module pymodelica.compiler:

compile_fmu(class_name, file_name = [], compiler = 'auto',

target = 'me', version = '2.0', platform = 'auto',

compiler_options = {}, compile_to = '.',

compiler_log_level = 'warning',

modelicapath = ' ', separate_process = True, jvm_args = '') :

Compile a Modelica model to an FMU.

A model class name must be passed, all other arguments have default values.

The different scenarios are:

* Only class_name is passed:

- Class is assumed to be in MODELICAPATH.

* class_name and file_name is passed:

- file_name can be a single path as a string or a list of paths

(strings). The paths can be file or library paths.

- Default compiler setting is 'auto' which means that the appropriate

compiler will be selected based on model file ending, i.e.

ModelicaCompiler if a .mo file and OptimicaCompiler if a .mop file is

found in file_name list.

The compiler target is 'me' by default which means that the shared

file contains the FMI for Model Exchange API. Setting this parameter to

'cs' will generate an FMU containing the FMI for Co-Simulation API.

Parameters::

class_name --

The name of the model class.

file_name --

A path (string) or paths (list of strings) to

model files and/or libraries. If file does not exist,an exception of

type pymodelica.compiler_exceptions.PyModelicaFileError is raised.

Default: Empty list.

compiler --

The compiler used to compile the model. The different options are:

- 'auto': the compiler is selected automatically depending on file ending

- 'modelica': the ModelicaCompiler is used

- 'optimica': the OptimicaCompiler is used

Default: 'auto'

target --

Compiler target. Possible values are 'me', 'cs' or 'me+cs'.

Default: 'me'

version --

The FMI version. Valid options are '1.0' and '2.0'.

Default: '2.0'

platform --

Set platform, controls whether a 32 or 64 bit FMU is generated.

This option is only available for Windows.

Valid options are :

- 'auto': platform is selected automatically.

This is the only valid option for linux & darwin.

- 'win32': generate a 32 bit FMU

- 'win64': generate a 64 bit FMU

Default: 'auto'

compiler_options --

Options for the compiler.

Default: Empty dict.

compile_to --

Specify target file or directory. If file, any intermediate directories

will be created if they don't exist. Furthermore, the modelica model

will be renamed to this name. If directory, the path given

must exist and the model will keep its original name.

Default: Current directory.

compiler_log_level --

Set the logging for the compiler. Takes a comma separated list with

log outputs. Log outputs start with a flag :'warning'/'w',

'error'/'e', 'verbose'/'v', 'info'/'i' or 'debug'/'d'.

The log can be written to file

by appended flag with a colon and file name.

Example: compiler_log_level='d:debug.txt', sets the log level to debug

and writes the log to a file named 'debug.txt'

Default: 'warning'

separate_process --

Run the compilation of the model in a separate process.

Checks the environment variables (in this order):

1. SEPARATE_PROCESS_JVM

2. JAVA_HOME

to locate the Java installation to use.

For example (on Windows) this could be:

SEPARATE_PROCESS_JVM = C:\Program Files\Java\jdk1.6.0_37

Default: True

jvm_args --

String of arguments to be passed to the JVM when compiling in a

separate process.

Default: Empty string

Returns::

A compilation result, represents the name of the FMU which has been

created and a list of warnings that was raised.

Compiler options🔗

Compiler options can be modified using the compile_fmu argument compiler_options. This is shown in the example below.

# Compile with the compiler option 'enable_variable_scaling' set to True

# Import the compiler function

from pymodelica import compile_fmu

# Specify model and model file

model_name = 'myPackage.myModel'

mo_file = 'myModelFile.mo'

# Compile

my_fmu = compile_fmu(model_name, mo_file,

compiler_options={"enable_variable_scaling":True})

Loading models🔗

Compiled models, FMUs, are loaded in the OCT Python interface with the FMUModel(ME/CS) (1/2) class from the pyfmi module, while optimization problems for the CasADi-based optimization are transferred directly into the OptimizationProblem class from the pyjmi module. This will be demonstrated in Loading an FMU and Transferring an Optimization Problem. The model classes contain many methods with which models can be manipulated after instantiation. Among the most important methods are initialize and simulate, which are used when simulating. These are explained in Simulations of FMUs in Python and Dynamic Optimization in Python. For more information on how to use the OptimizationProblem for optimization purposes, see Dynamic Optimization in Python. The more basic methods for variable and parameter manipulation are explained in Changing model parameters.

Loading an FMU🔗

An FMU file can be loaded in OCT with the method load_fmu in the pyfmi module. The following short example demonstrates how to do this in a Python shell or script.

# Import load_fmu from pyfmi

from pyfmi import load_fmu

myModel = load_fmu('myFMU.fmu')

Transferring an Optimization Problem🔗

An optimization problem can be transferred directly from the compiler in OCT into the class OptimizationProblem in the pyjmi module. The transfer is similar to the combined steps of compiling and then loading an FMU. The following short example demonstrates how to do this in a Python shell or script.

# Import transfer_optimization_problem

from pyjmi import transfer_optimization_problem

# Specify Modelica model and model file

model_name = 'myPackage.myModel'

mo_file = 'myModelFile.mo'

# Compile the model, return argument is an OptimizationProblem

myModel = transfer_optimization_problem(model_name, mo_file)

Changing model parameters🔗

Model parameters can be altered with methods in the model classes once the model has been loaded. Some short examples in Setting and getting parameters will demonstrate this.

Setting and getting parameters🔗

The model parameters can be accessed via the model class interfaces. It is possible to set and get one specific parameter at a time or a whole list of parameters.

The following code example demonstrates how to get and set a specific parameter using an example FMU model from the pyjmi.examples package.

# Compile and load the model

from pymodelica import compile_fmu

from pyfmi import load_fmu

my_fmu = compile_fmu('RLC_Circuit','RLC_Circuit.mo')

rlc_circuit = load_fmu(my_fmu)

# Get the value of the parameter 'resistor.R' and save the result in a variable

'resistor_r'

resistor_r = rlc_circuit.get('resistor.R')

# Give 'resistor.R' a new value

resistor_r = 2.0

rlc_circuit.set('resistor.R', resistor_r)

# Create a list of parameters, get and save the corresponding values in a variable 'values'

vars = ['resistor.R', 'resistor.v', 'capacitor.C', 'capacitor.v']

values = rlc_circuit.get(vars)

# Change some of the values

values[0] = 3.0

values[3] = 1.0

rlc_circuit.set(vars, values)

Debugging models🔗

The OCT compilers can generate debugging information in order to facilitate localization of errors. There are three mechanisms for generating such diagnostics: dumping of debug information to the system output, generation of HTML code that can be viewed with a standard web browser or logs in XML format from the non-linear solver.

Compiler logging🔗

The amount of logging that should be output by the compiler can be set with the argument compiler_log_level to the compile-functions (compile_fmu and also transfer_optimization_problem). The available log levels are 'warning' (default), 'error', 'info', 'verbose' and 'debug' which can also be written as 'w', 'e', 'i', 'v' and 'd' respectively. The following example demonstrates setting the log level to 'info':

# Set compiler log level to 'info'

compile_fmu('myModel', 'myModels.mo', compiler_log_level='info')

The log is printed to the standard output, normally the terminal window from which the compiler is invoked.

The log can also be written to file by appending the log level flag with a colon and file name. This is shown in the following example:

# Set compiler log level to info and write the log to a file log.txt

compile_fmu('myModel', 'myModels.mo', compiler_log_level='i:log.txt')

It is possible to specify several log outputs by specifying a comma separated list. The following example writes log warnings and errors (log level 'warning' or 'w') to the standard output and a more verbose logging to file (log level 'info' or 'i'):

# Write warnings and errors to standard output and the log with log level info to log.txt

compile_fmu('myModel', 'myModels.mo', compiler_log_level= 'w,i:log.txt')

Runtime logging🔗

Runtime logging refers to logging of data during simulation, this section outlines some methods to retrieve simulation data from an FMU.

Setting log level🔗

Many events that occur inside of an FMU can generate log messages. The log messages from the runtime are saved

in a file with the default name

# Load model

model = load_fmu(fmu_name, log_file_name='MyLog.txt')

# Load model and set log level to 5

model = load_fmu(fmu_name, log_level=5)

# Change log level to 7

model.set_log_level(7)

# Load model (with default log level)

model = load_fmu(fmu_name)

# Set amount of logging produced to the most verbose

model.set('_log_level', 6)

# Change log level to 7 to be able to see everything that is being produced

model.set_log_level(7)

Interpreting logs from FMUs produced by OCT🔗

In OCT, information is logged in XML format, which ends up mixed with FMI Library output in the resulting log file. Example: (the following examples are based on the example pyjmi.examples.logger_example.)

1 ...

2 FMIL: module = FMICAPI, log level = 5: Calling fmiInitialize

3 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <EquationSolve>Model equations evaluation invoked at<value name="t"> 0.0000000000000000E+00</value>

4 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <BlockEventIterations>Starting block (local) event iteration at<value name="t"> 0.0000000000000000E+00</value>in<value name="block">0</value>

5 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <vector name="ivs"> 0.0000000000000000E+00, 0.0000000000000000E+00, 0.0000000000000000E+00<vector>

6 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <vector name="switches">0.0000000000000000E+00, 0.0000000000000000E+00, 0.0000000000000000E+00, 0.0000000000000000E+00</vector>

7 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <vectorname="booleans"></vector>

8 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <BlockIteration>Localiteration<value name="iter">1</value>at<value name="t"> 00000000000000000E+00</value>

9 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <JacobianUpdated><value name="block">0</value>

10 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] <matrixname="jacobian">

11 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] -1.0000000000000000E+00, 4.0000000000000000E+00, 0.0000000000000000E+00;

12 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] -1.0000000000000000E+00, -1.0000000000000000E+00, -1.0000000000000000E+00;

13 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] -1.0000000000000000E+00, 1.0000000000000000E+00, -1.0000000000000000E+00;

14 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] </matrix>

15 FMIL: module = Model, log level = 4: [INFO][FMU status:OK] </JacobianUpdated>

16 ...

# Generate an XML file from the simulation log that

was generated by model.simulate()

model.extract_xml_log()

# Parse the entire XML log

log = pyfmi.common.log.parse_fmu_xml_log(log_file_name)

• Named children are accessed by indexing with a string: node['t'], or simply dot notation: node.t.

• Unnamed children are accessed as a list node.nodes, or by iterating over the node.

There is also a convenience function gather_solves to extract common information about equation solves in the log. This function collects nodes of certain types from the log and annotates some of them with additional named children. The following example is from pyjmi.examples.logger_example:

1 # Parse the entire XML log

2 log = pyfmi.common.log.parse_fmu_xml_log(log_file_name)

3 # Gather information pertaining to equation solves

4 solves = pyjmi.log.gather_solves(log)

5

6 print('Number of solver invocations:', len(solves))

7 print('Time of first solve:', solves[0].t)

8 print('Number of block solves in first solver invocation:', len(solves[0].block_solves)

9 print('Names of iteration variables in first block solve:',

solves[0].block_solves[0].variables))

10 print('Min bounds in first block solve:',

solves[0].block_solves[0].min)

11 print('Max bounds in first block solve:',

solves[0].block_solves[0].max)

12 print('Initial residual scaling in first block solve:',

solves[0].block_solves[0].initial_residual_scaling)

13 print('Number of iterations in first block solve:',

len(solves[0].block_solves[0].iterations)

14 print('\n')

15 print('First iteration in first block solve: ')

16 print(' Iteration variables:',

solves[0].block_solves[0].iterations[0].ivs)

17 print(' Scaled residuals:',

solves[0].block_solves[0].iterations[0].residuals)

18 print(' Jacobian:\n',

solves[0].block_solves[0].iterations[0].jacobian)

19 print(' Jacobian updated in iteration:',

solves[0].block_solves[0].iterations[0].jacobian_updated)

20 print(' Residual scaling factors:',

solves[0].block_solves[0].iterations[0].residual_scaling)

21 print(' Residual scaling factors_updated:',

solves[0].block_solves[0].iterations[0].residual_scaling_updated)

22 print(' Scaled residual norm:',

solves[0].block_solves[0].iterations[0].scaled_residual_norm)

Compiler Diagnostic Output🔗

By setting the compiler option generate_html_diagnostics to true, a number of HTML pages containing diagnostics are generated. The HTML files are generated in the directory Model_Name_diagnostics, where Model_Name is the name of the compiled model. As compared to the diagnostics generated by the compiler_log_level argument, the HTML diagnostics contains only the most important information, but it also provides a better overview. Opening the file Model_Name_diagnostics/index.html in a web browser, results in a page with information on number of variables, parameters and equations as well as other statistics about the model.

Note that some of the entries in Model_Name_diagnostics/index.html, including Problems, Flattened model, Connection sets, Transformed model, Alias sets, BLT diagnostics table, BLT for DAE System and BLT for Initialization System are links to sub pages containing additional information. For example, the BLT for DAE System page contains information about in which order the model equations are evaluated and which blocks are present after compilation.

Additionally there is a table view of the BLT. It can be found on the BLT diagnostics table page. It provides a graphical representation of the BLT. The BLT diagnostics table is only generated when the model have fewer equations than the limit specified by the option diagnostics_limit due to the size of the graph.

In the following section a more thorough description of the HTML diagnostics will be presented.

HTML diagnostics🔗

The compiler can generate diagnostic output in HTML format which can be viewed in, e.g, a web browser. The generation is enabled through the option generate_html_diagnostics and the diagnostic consists of several pages which will be presented in the sections below.

index.html

index.html is the index page or, i.e., the start page of the HTML diagnostics. It consists of links to the other diagnostic pages as well as statistics of the compiled model. Model before transformation summarizes model statistics of the flattened model. Model after transformation gives the statistics after the compiler has done its transformations to the model like, for example, alias elimination. Finally the number of unsolved equation blocks in DAE initialization system and system before and after tearing is applied is presented. Note that nested blocks are not visible in the equation block statistics.

errors.html

The page errors.html, which can be reached from Problems in the index page, lists all compiler errors and warnings that occurred during compilation.

flattened.html

In flattened.html, the flattened model, which the numbers in Model before transformation corresponds to, is presented. That includes a listing of all constants, parameters and variables in the model with their type_prefix, type_specifier, the possible array subscripts, and the fully qualified name. If the type_specifier is not a built-in type, the defined type will be presented at the end of the page like, e.g.,

type Modelica.Units.SI.MassFraction = Real(final quantity = "MassFraction",final unit = "1",min = 0,max = 1);

After all the components, the initial equations are presented followed by the equations in the order they are read in by the compiler. Note that the components are given by their fully qualified name. When functions are used in the model, the function description with its inputs, outputs and algorithm is given in the end of the page. Definitions of records used in the model can be found there as well.

Note that uses of constants, e.g., Modelica.Constants.pi, in

parameter Real x=Modelica.Constants.pi;

will have been evaluated (to 3.141592653589793) when declared in the flattened model. This is also true for parameters and variables which are necessary to evaluate (for example parameters used as array sizes) or determined to be equivalent to constants (for example a final independent parameter).

transformed.html

he transformed model, presented in transformed.html, has the same structure as the flattened model. The numbers in Model after transformation corresponds to this stage of the compilation process. In the transformed model alias variables are removed, temporary variables are introduced and some other symbolic transformations are performed. Furthermore, extra initial equations may have been introduced based on, e.g., start attributes set on variables.

alias.html

In alias.html alias variables are listed set by set. Each set is enclosed within curly brackets and the first variable in the list is the variable name used in the transformed model.

blt.html and initBlt.html

In initBlt.html and blt.html all the equations are sorted in the order in which they are calculated, i.e., by causality. The initialization system is found in initBlt.html and the BLT for the DAE system in blt.html. In case of an interactive FMU, these two systems coincide.

The BLT consists of solved equations, meta equations and different kinds of blocks. For equations below the Solved equation label, the variable on the left hand side is calculated directly through evaluation of the right hand side. Meta equation blocks hold assert statements etc.

Blocks can be linear, non-linear as well as having discrete parts. The block type is documented in the title, for example, Torn system (Block 1) of 1 iteration variables and 3 solved variables. Included in the title is also the name of the block, which in turn is used in the runtime logging. Continuous iteration variables, torn variables and discrete variables are listed in separate columns. So are also the equations corresponding to each of the categories.

When reading the BLT from the interactive FMU perspective, res_i, with i=0,1,2..., corresponds to the residual equations. There is no easy way to detect which variables are the iteration variables of the steady state problem from this view. Nestled blocks will be presented as blocks are presented for segregated FMUs, before the residual equations, since these are to be solved before the residuals can be evaluated.

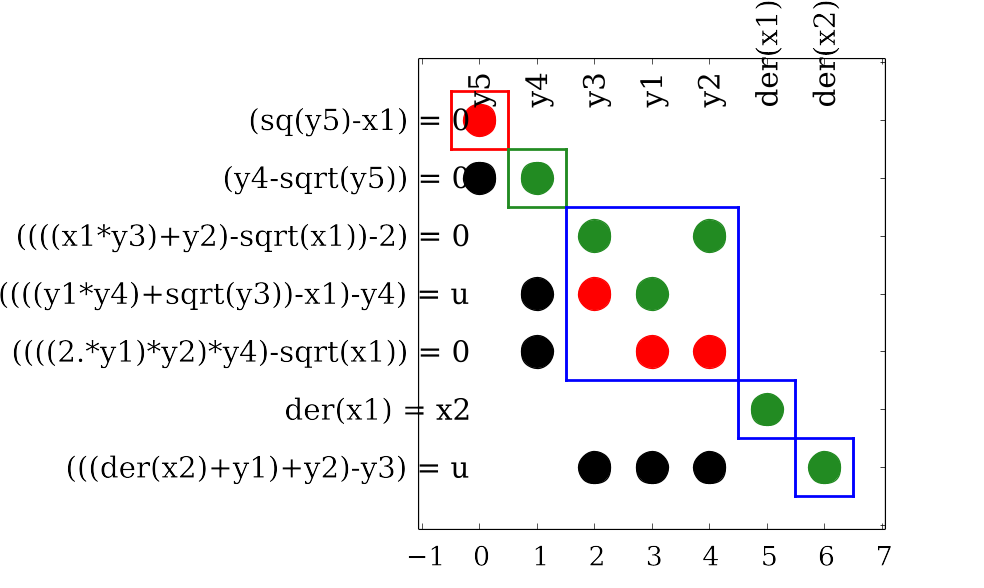

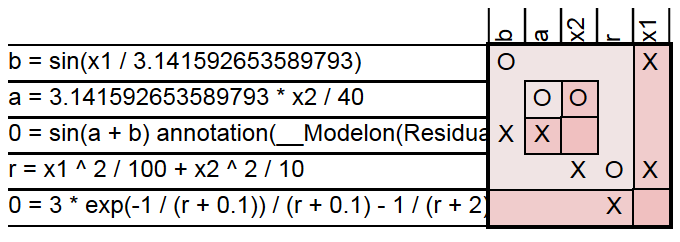

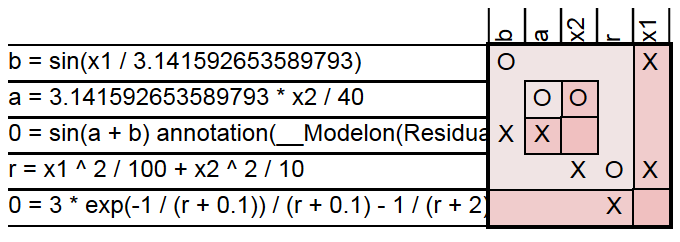

bltTable.html

The relationship between the equations and the variables is presented in bltTable.html. As for the BLT, there exist two tables: one for the initialization system and one for the DAE system. Even for this case, the tables are the same for an interactive FMU.

In the table, the equations are listed in the rows and the variables in the columns. The equation appears in the same form as in transformed.html. There are different colors and symbols in the BLT table. We have

| o | The 'o' means that the variable is analytically solvable from the equation if all the other variables are known. |

| x | The 'x' means that the variable cannot be solved for analytically even if the other variables are known. |

| green | The green color marks a solved equation. |

| pink | A pink block shows algebraic equation blocks |

| dark pink | The dark pink highlights the iteration variables and residual equations of the lighter pink block. |

| orange | The orange color marks discrete equations and variables. |

| blue | The blue color marks an equation block where all equations are unsolved. |

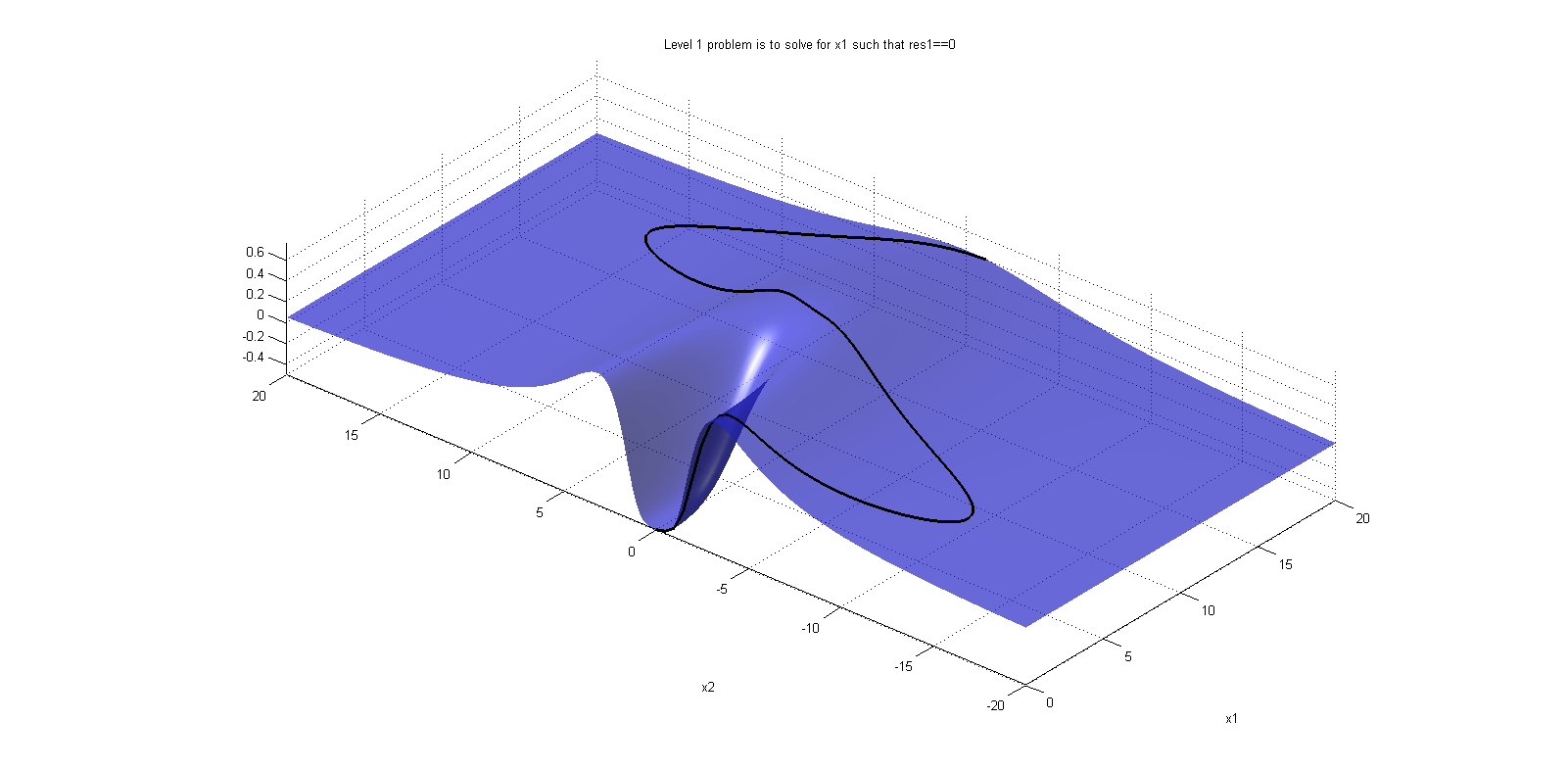

An example of how the BLT table may look like can be found in Figure 4.1. Note that a legned is also generated in the BLT with an explanation of the symbols.

Simulations of FMUs in Python🔗

Introduction🔗

OCT supports simulation of models described in the Modelica language and models following the FMI standard. The simulation environment uses Assimulo as standard which is a standalone Python package for solving ordinary differential and differential algebraic equations. Loading and simulation of FMUs has additionally been made available as a separate Python package, PyFMI.

This chapter describes how to load and simulate FMUs using explanatory examples.

A first example🔗

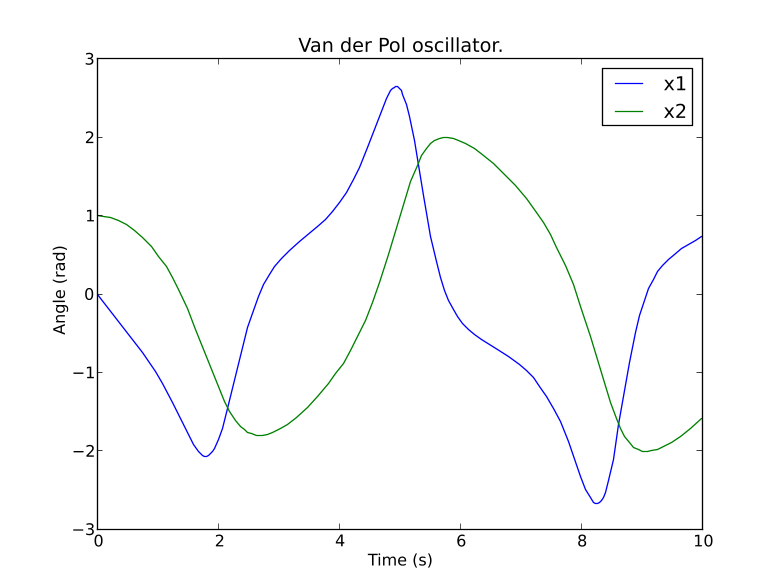

This example focuses on how to use OCT's simulation functionality in the most basic way. The model which is to be simulated is the Van der Pol problem described in the code below. The model is also available from the examples in OCT in the file VDP.mop (located in install/Python/pyjmi/examples/files).

model VDP

// State start values

parameter Real x1_0 = 0;

parameter Real x2_0 = 1;

// The states

Real x1(start = x1_0);

Real x2(start = x2_0);

// The control signal

input Real u;

equation

der(x1) = (1 - x2^2) * x1 - x2 + u;

der(x2) = x1;

end VDP;

Create a new file in your working directory called VDP.mo and save the model.

Next, create a Python script file and write (or copy paste) the commands for compiling and loading a model:

# Import the function for compilation of models and the load_fmu method

from pymodelica import compile_fmu

from pyfmi import load_fmu

# Import the plotting library

import matplotlib.pyplot as plt

# Compile model

fmu_name = compile_fmu("VDP","VDP.mo")

# Load model

vdp = load_fmu(fmu_name)

The function compile_fmu compiles the model into a binary, which is then loaded when the vdp object is created. This object represents the compiled model, an FMU, and is used to invoke the simulation algorithm (for more information about model compilation and options, see Working with Models in Python:

res = vdp.simulate(final_time=10)

x1 = res['x1']

x2 = res['x2']

t = res['time']

plt.figure(1)

plt.plot(t, x1, t, x2)

plt.legend(('x1','x2'))

plt.title('Van der Pol oscillator.')

plt.ylabel('Angle (rad)')

plt.xlabel('Time (s)')

plt.show()

Simulation of Models🔗

Simulation of models in OCT is performed via the simulate method of a model object. The FMU model objects in OCT are located in PyFMI:

• FMUModelME1 / FMUModelME2

• FMUModelCS1 / FMUModelCS2

FMUModelME * / FMUModelCS * also supports compiled models from other simulation/modelling tools that follow the FMI standard (extension .fmu) (either Model exchange FMUs or Co-Simulation FMUs). Both FMI version 1.0 and FMI version 2.0 are supported. For more information about compiling a model in OCT see Working with Models in Python.

The simulation method is the preferred method for simulation of models and which by default is connected to the Assimulo simulation package but can also be connected to other simulation platforms. The simulation method for FMUModelME * / FMUModelCS * is defined as:

class FMUModel(ME/CS)(...)

...

def simulate(self,

start_time=0.0,

final_time=1.0,

input=(),

algorithm='AssimuloFMIAlg',

options={}):

And used in the following way:

res = FMUModel(ME/CS)*.simulate() # Using default values

For FMUModelCS *, the FMU contains the solver and is thus used (although using the same interface)

Convenience method, load_fmu🔗

Since there are different FMI specifications for Model exchange and Co-Simulation and also differences between versions, a convenience method, load_fmu has been created. This method is the preferred access point for loading an FMU and will return an instance of the appropriate underlying FMUModel(CS/ME) * class.

Arguments🔗

The start and final time attributes are simply the time where the solver should start the integration and stop the integration. The input however is a bit more complex and is described in more detail in the following section. The algorithm attribute is where the different simulation package can be specified, however currently only a connection to Assimulo is supported and connected through the algorithm AssimuloFMIAlg for FMUModelME *.

Inputs🔗

The input argument defines the input trajectories to the model and should be a 2-tuple consisting of the names of the input variables and their trajectories. The names can be either a list of strings, or a single string for setting only a single input trajectory. The trajectories can be given as either a data matrix or a function. If a data matrix is used, it should contain a time vector as the first column, and then one column for each input, in the order of the list of names. If instead the second argument is a function it should be defined to take the time as input and return an array with the values of the inputs, in the order of the list of names.

For example, consider that we have a model with an input variable u1 and that the model should be driven by a sine wave as input. We are interested in the interval 0 to 10. We will look at both using a data matrix and at using a function.

import numpy as N

t = N.linspace(0.,10.,100) # Create one hundred evenly spaced points

u = N.sin(t) # Create the input vector

u_traj = N.transpose(N.vstack((t,u))) # Create the data matrix and transpose

# it to the correct form

input_object = ('u1', u_traj)

Now we are ready to simulate using the input and simulate 10 seconds.

res = model.simulate(final_time=10, input=input_object)

import numpy as N

t = N.linspace(0.,10.,100) # Create one hundred evenly spaced points

u1 = N.sin(t) # Create the first input vector

u2 = N.cos(t) # Create the second input vector

u_traj = N.transpose(N.vstack((t,u1,u2))) # Create the data matrix and

# transpose it to the correct form

input_object = (['u1','u2'], u_traj)

res = model.simulate(final_time=10, input=input_object)

Options for Model Exchange FMUs🔗

The options attribute are where options to the specified algorithm are stored and are preferably used together with:

opts = FMUModelME*.simulate_options()

>>> help(opts)

Options for the solving the FMU using the Assimulo simulation package.

Currently, the only solver in the Assimulo package that fully supports

simulation of FMUs is the solver CVode.

...

In Table 1 the general options for the AssimuloFMIAlg algorithm are described while in Table 2 a selection of the different solver arguments for the ODE solver CVode is shown. More information regarding the solver options can be found here, www.jmodelica.org/assimulo.

| Options | Default | Description |

|---|---|---|

| solver | "CVode" | Specifies the simulation method that is to be used. Currently supported solvers are, CVode, Radau5ODE, RungeKutta34, Dopri5, RodasODE, LSODAR, ExplicitEuler. The recommended solver is "CVode". |

| ncp | 500 | Number of communication points. If ncp is zero, the solver will return the internal steps taken. |

| initialize | True | If set to True, the initializing algorithm defined in the FMU model is invoked, otherwise it is assumed the user have manually invoked model.initialize() |

| write_scaled_result | False | When true, write the result to file without taking numerical scaling into account. |

| result_file_name | Empty string (default generated file name will be used) | Specifies the name of the file where the simulation result is written. Setting this option to an empty string results in a default file name that is based on the name of the model class. |

| filter | None | A filter for choosing which variables to actually store result for. The syntax can be found here. An example is filter = "*der" , store all variables ending with 'der' and filter = ["*der*", "summary*"], store all variables with "der" in the name and all variables starting with "summary". |

| result_handling | "file" | Specifies how the result should be handled. Either stored to file or stored in memory. One can also use a custom handler. Available options: "file", "memory", "custom" |

Lets look at an example, consider that you want to simulate an FMU model using the solver CVode together with changing the discretization method (discr) from BDF to Adams:

opts = model.simulate_options() # Retrieve the default options

#opts['solver'] = 'CVode' # Not necessary, default solver is CVode

opts['CVode_options']['discr'] = 'Adams' # Change from using BDF to Adams

opts['initialize'] = False # Don't initialize the model

model.simulate(options=opts) # Pass in the options to simulate and simulate

opts['CVode_options']['atol'] = 1.0e-6 # Options specific for CVode

opts['solver'] = 'CVode' # Changing the solver

opts['ncp'] = 1000 # Changing the number of communication points.

| Option | Default | Description |

|---|---|---|

| disc | 'BDF' | Discretization method. Can be either 'BDF' or 'Adams'. |

| iter | 'Newton' | The iteration method. Can be either 'Newton' or 'FixedPoint'. |

| maxord | 5 | The maximum order used. Maximum for 'BDF' is 5 while for the 'Adams' method the maximum is 12. |

| minh | 0.0 | Minimal step-size. |

| maxh | Inf | Maximum step-size. |

| rtol | 1e-4 | Relative tolerance. The relative tolerance is retrieved from the 'default experiment' section in the XMLfile and if not found is set to 1.0e-4. |

| atol | rtol*0.01*(nominal values of the continuous states) | Absolute Tolerance. Can be an array where each value corresponds to the absolute tolerance for the corresponding variable. Can also be a single value. |

Options for Co-Simulation FMUs🔗

The options attribute are where options to the specified algorithm are stored, and are preferably used together with:

opts = FMUModelCS*.simulate_options()

>>> help(opts)

Options for the solving the CS FMU.

...

In Table 3 the general options for the FMICSAlg algorithm are described.

| Options | Default | Description |

|---|---|---|

| ncp | 500 | Number of communication points. If ncp is zero, the solver will return the internal steps taken. |

| initialize | True | If set to True, the initializing algorithm defined in the FMU model is invoked, otherwise it is assumed the user have manually invoked model.initialize() |

| write_scaled_result | False | When true, write the result to file without taking numerical scaling into account. |

| result_file_name | Empty string (default generated file name will be used) | Specifies the name of the file where the simulation result is written. Setting this option to an empty string results in a default file name that is based on the name of the model class. |

| filter | None | A filter for choosing which variables to actually store result for. The syntax can be found here. An example is filter = "*der" , store all variables ending with 'der' and filter = ["*der*", "summary*"], store all variables with "der" in the name and all variables starting with "summary". |

| result_handling | "file" | Specifies how the result should be handled. Either stored to file or stored in memory. One can also use a custom handler. Available options: "file", "memory", "custom" |

Return argument🔗

The return argument from the simulate method is an object derived from a common result object ResultBase in algorithm_drivers.py with a few extra convenience methods for retrieving the result of a variable. The result object can be accessed in the same way as a dictionary type in Python with the name of the variable as key.

res = model.simulate()

y = res['y'] # Return the result for the variable/parameter/constant y

dery = res['der(y)'] # Return the result for the variable/parameter/constant der(y)

| Options | Default | Description |

|---|---|---|

| options | Property | Gets the options object that was used during the simulation. |

| solver | Property | Gets the solver that was used during the integration. |

| result_file | Property | Gets the name of the generated result file. |

| is_variable(name) | Method | Returns True if the given name is a time-varying variable. |

| data_matrix | Property | Gets the raw data matrix. |

| is_negated(name) | Method | Returns True if the given name is negated in the result matrix. |

| get_column(name) | Method | Returns the column number in the data matrix which corresponds to the given variable. |

Examples🔗

In the next sections, it will be shown how to use the OCT platform for simulation of various FMUs. The Python commands in these examples may be copied and pasted directly into a Python shell, in some cases with minor modifications. Alternatively, they may be copied into a file, which also is the recommended way.

Simulation of a high-index model🔗

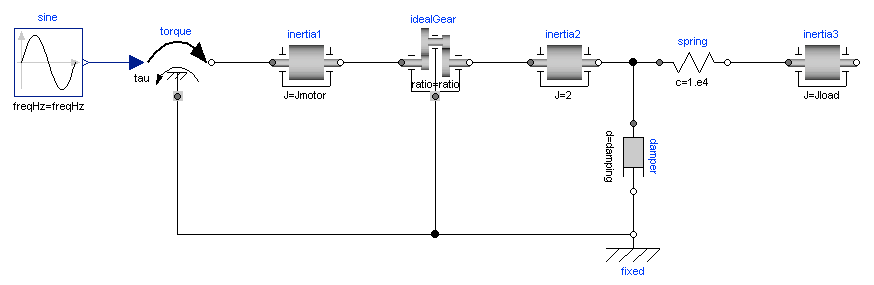

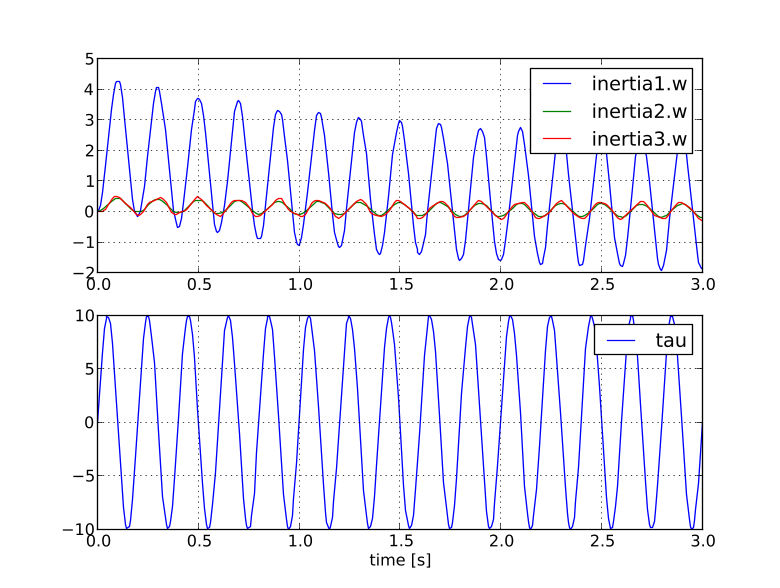

Mechanical component-based models often result in high-index DAEs. In order to efficiently integrate such models, Modelica tools typically employs an index reduction scheme, where some equations are differentiated, and dummy derivatives are selected. In order to demonstrate this feature, we consider the model Modelica.Mechanics.Rotational.Examples.First from the Modelica Standard library, see Figure 5.2. The model is of high index since there are two rotating inertias connected with a rigid gear.

First create a Python script file and enter the usual imports:

import matplotlib.pyplot as plt

from pymodelica import compile_fmu

from pyfmi import load_fmu

# Compile model

fmu_name = compile_fmu("Modelica.Mechanics.Rotational.Examples.First")

# Load model

model = load_fmu(fmu_name)

Notice that no file name, just an empty tuple, is provided to the function compile_fmu, since in this case the model that is compiled resides in the Modelica Standard Library. In the compilation process, the index reduction algorithm is invoked. Next, the model is simulated for 3 seconds:

# Load result file

res = model.simulate(final_time=3.)

w1 = res['inertia1.w']

w2 = res['inertia2.w']

w3 = res['inertia3.w']

tau = res['torque.tau']

t = res['time']

plt.figure(1)

plt.subplot(2,1,1)

plt.plot(t,w1,t,w2,t,w3)

plt.grid(True)

plt.legend(['inertia1.w','inertia2.w','inertia3.w'])

plt.subplot(2,1,2)

plt.plot(t,tau)

plt.grid(True)

plt.legend(['tau'])

plt.xlabel('time [s]')

plt.show()

Simulation and parameter sweeps🔗

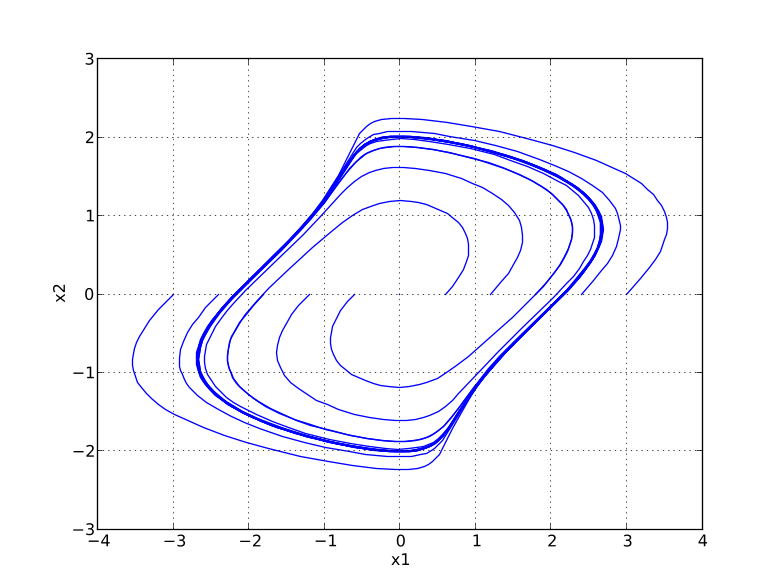

This example demonstrates how to run multiple simulations with different parameter values. Sweeping parameters is a useful technique for analysing model sensitivity with respect to uncertainty in physical parameters or initial conditions. Consider the following model of the Van der Pol oscillator:

model VDP

// State start values

parameter Real x1_0 = 0;

parameter Real x2_0 = 1;

// The states

Real x1(start = x1_0);

Real x2(start = x2_0);

// The control signal

input Real u;

equation

der(x1) = (1 - x2^2) * x1 - x2 + u;

der(x2) = x1;

end VDP;

Notice that the initial values of the states are parametrized by the parameters x1_0 and x2_0. Next, copy the Modelica code above into a file VDP.mo and save it in your working directory. Also, create a Python script file and name it vdp_pp.py. Start by copying the commands:

import numpy as N

import pylab as P

from pymodelica import compile_fmu

from pyfmi import load_fmu

# Define model file name and class name

model_name = 'VDP'

mofile = 'VDP.mo'

# Compile model

fmu_name = compile_fmu(model_name,mofile)

Next, we define the initial conditions for which the parameter sweep will be done. The state x2 starts at 0, whereas the initial condition for x1 is swept between -3 and 3:

# Define initial conditions

N_points = 11

x1_0 = N.linspace(-3.,3.,N_points)

x2_0 = N.zeros(N_points)

fig = P.figure()

P.clf()

P.xlabel('x1')

P.ylabel('x2')

for i in range(N_points):

# Load model

vdp = load_fmu(fmu_name)

# Set initial conditions in model

vdp.set('x1_0',x1_0[i])

vdp.set('x2_0',x2_0[i])

# Simulate

res = vdp.simulate(final_time=20)

# Get simulation result

x1=res['x1']

x2=res['x2']

# Plot simulation result in phase plane plot

P.plot(x1, x2,'b')

P.grid()

P.show()

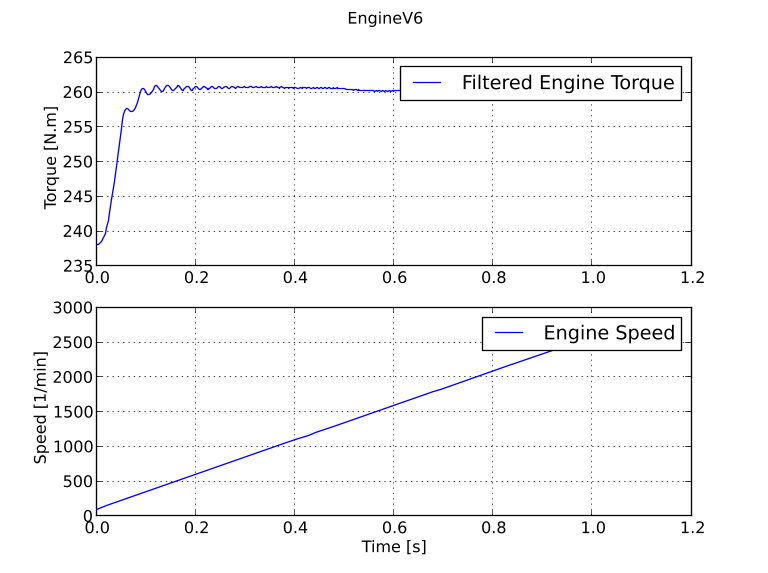

Simulation of an Engine model with inputs🔗

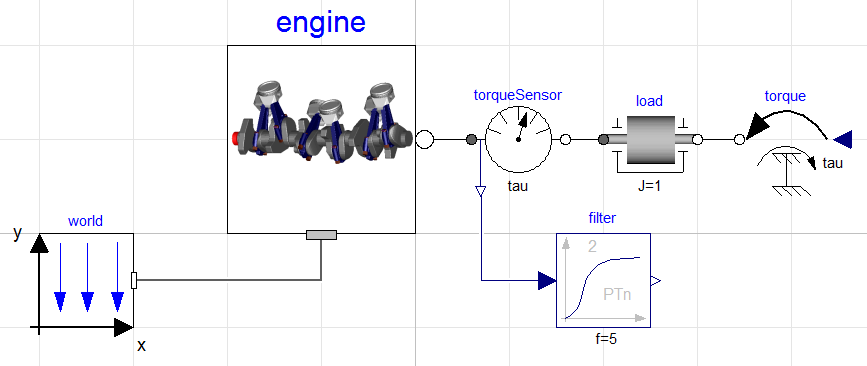

In this example, the model is larger than the previous one. It is a slightly modified version of the model EngineV6_analytic from the Multibody library in the Modelica Standard Library. The modification consists of a replaced load with a user-defined load. This has been done to be able to demonstrate how inputs are set from a Python script. In Figure 5.5 the model is shown.

The Modelica code for the model is shown below, copy and save the code in a file named EngineV6.mo

model EngineV6_analytic_with_input

output Real engineSpeed_rpm= Modelica.Units.SI.Conversions.to_rpm(load.w);

output Real engineTorque = filter.u;

output Real filteredEngineTorque = filter.y;

input Real u;

import Modelica.Mechanics.*;

inner MultiBody.World world;

MultiBody.Examples.Loops.Utilities.EngineV6_analytic engine(redeclare

model Cylinder = MultiBody.Examples.Loops.Utilities.Cylinder_analytic_CAD);

Rotational.Components.Inertia load(

phi(start=0,fixed=true), w(start=10,fixed=true),

stateSelect=StateSelect.always,J=1);

Rotational.Sensors.TorqueSensor torqueSensor;

Rotational.Sources.Torque torque;

Modelica.Blocks.Continuous.CriticalDamping filter(

n=2,initType=Modelica.Blocks.Types.Init.SteadyState,f=5);

equation

torque.tau = u;

connect(world.frame_b, engine.frame_a);

connect(torque.flange, load.flange_b);

connect(torqueSensor.flange_a, engine.flange_b);

connect(torqueSensor.flange_b, load.flange_a);

connect(torqueSensor.tau, filter.u);

annotation (experiment(StopTime=1.01));

end EngineV6_analytic_with_input;

Now that the model has been defined, we create our Python script which will compile, simulate and visualize the result for us. Create a new text-file and start by copying the below commands into the file. The code will import the necessary methods and packages into Python.

from pymodelica import compile_fmu

from pyfmi import load_fmu

import pylab as P

Compiling the model is performed by invoking the compile_fmu method where the first argument is the name of the model and the second argument is where the model is located (which file). The method will create an FMU in the current directory and to simulate the FMU, we need to additionally load the created FMU into Python. This is done with the load_fmu method which takes the name of the FMU as input.

name = compile_fmu("EngineV6_analytic_with_input", "EngineV6.mo")

model = load_fmu(name)

opts = model.simulate_options()

opts["ncp"] = 1000 #Specify that 1000 output points should be returned

A simulation is finally performed using the simulate method on the model and as we have changed the options, we need to additionally provide these options to the simulate method.

P.plot(res["time"],res["filteredEngineTorque"], label="Filtered Engine Torque")

P.show()

In Figure 5.6 the trajectories are shown for he engine torque and the engine speed utilizing subplots from Matplotlib.

Above we have simulated the engine model and looked at the result, we have not however specified any load as input. Remember that the model we are looking at has a user-specified load. Now we will create a Python function that will act as our input. We create a function that depends on the time and returns the value for use as input.

def input_func(t):

return -100.0*t

In order to use this input in the simulation, simply provide the name of the input variable and the function as the input argument to the simulate method, see below.

res = model.simulate(options=opts, input=("u",input_func))

Large models contain an enormous amount of variables and by default, all of these variables are stored in the result. Storing the result takes time and for large models, the saving of the result may be responsible for the majority of the overall simulation time. Not all variables may be of interest, for example in our case, we are only interested in two variables so storing the other variables are not necessary. In the options dictionary there is a filter option which allows specifying which variables should be stored, so in our case, try the below filter and look at the impact on the simulation time.

opts["filter"] = ["filteredEngineTorque", "engineSpeed_rpm"]

Simulation using the native FMI interface🔗

This example shows how to use the native OCT FMI interface for the simulation of an FMU of version 2.0 for Model Exchange. For the procedure with version 1.0, refer to Functional Mock-up Interface for Model Exchange version 1.0.

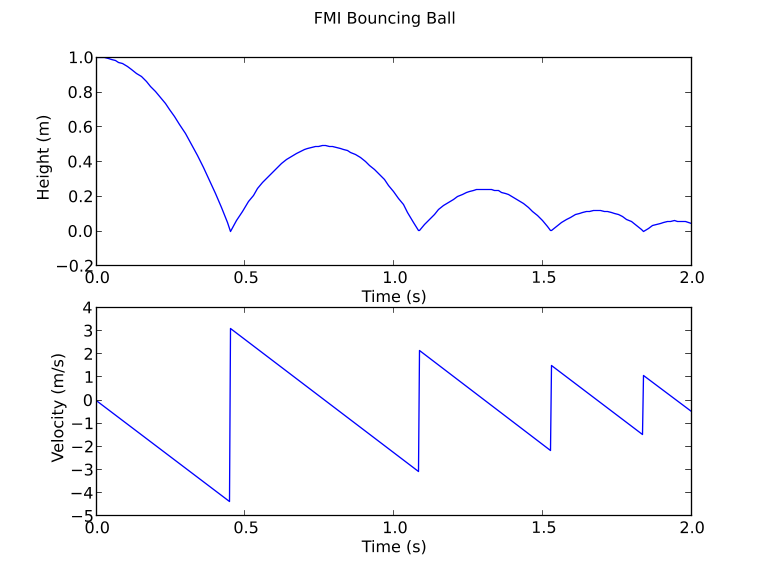

The FMU that is to be simulated is the bouncing ball example from Qtronics FMU SDK . This example is written similarly to the example in the documentation of the 'Functional Mockup Interface for Model Exchange' version 2.0 https://www.fmi-standard.org/ The bouncing ball model is to be simulated using the explicit Euler method with event detection.

The example can also be found in the Python examples catalog in the OCT platform. There you can also find a similar example for simulation with a version 1.0 Model Exchange FMU.

The bouncing ball consists of two equations,

and one event function (also commonly called root function),

Where the ball bounces and loses some of its energy according to,

Here, h is the height, g the gravity, v the velocity and e a dimensionless parameter. The starting values are, h=1 and v=0 and for the parameters, e=0.7 and g = 9.81.

Implementation🔗

Start by importing the necessary modules,

import numpy as N

import pylab as P # Used for plotting

from pyfmi.fmi import load_fmu # Used for loading the FMU

Next, the FMU is to be loaded and initialized

# Load the FMU by specifying the fmu together with the path.

bouncing_fmu = load_fmu('/path/to/FMU/bouncingBall.fmu')

Tstart = 0.5 # The start time.

Tend = 3.0 # The final simulation time.

# Initialize the model. Also sets all the start attributes defined in the XML file.

bouncing_fmu.setup_experiment(start_time = Tstart) # Set the start time to Tstart

bouncing_fmu.enter_initialization_mode()

bouncing_fmu.exit_initialization_mode()

he first line loads the FMU and connects the C-functions of the model to Python together with loading the information from the XML-file. The start time also needs to be specified by providing the argument start_time to setup_experiment. The model is also initialized, which must be done before the simulation is started.

Note that if the start time is not specified, FMUModelME2 tries to find the starting time in the XML-file structure 'default experiment' and if successful starts the simulation from that time. Also if the XML-file does not contain any information about the default experiment the simulation is started from time zero.

Next step is to do the event iteration and thereafter enter continuous time mode.

eInfo = bouncing_fmu.get_event_info()

eInfo.newDiscreteStatesNeeded = True

#Event iteration

while eInfo.newDiscreteStatesNeeded == True:

bouncing_fmu.enter_event_mode()

bouncing_fmu.event_update()

eInfo = bouncing_fmu.get_event_info()

bouncing_fmu.enter_continuous_time_mode()

# Get Continuous States

x = bouncing_fmu.continuous_states

# Get the Nominal Values

x_nominal = bouncing_fmu.nominal_continuous_states

# Get the Event Indicators

event_ind = bouncing_fmu.get_event_indicators()

# Values for the solution

# Retrieve the valureferences for the values 'h' and 'v'

vref = [bouncing_fmu.get_variable_valueref('h')] + \

[bouncing_fmu.get_variable_valueref('v')]

t_sol = [Tstart]

sol = [bouncing_fmu.get_real(vref)]

Here the continuous states together with the nominal values and the event indicators are stored to be used in the integration loop. In our case, the nominal values are all equal to one. This information is available in the XML-file. We also create lists that are used for storing the result. The final step before the integration is started is to define the step-size.

time = Tstart

Tnext = Tend # Used for time events

dt = 0.01 # Step-size

# Main integration loop.

while time < Tend and not bouncing_fmu.get_event_info().terminateSimulation:

#Compute the derivative of the previous step f(x(n), t(n))

dx = bouncing_fmu.get_derivatives()

# Advance

h = min(dt, Tnext-time)

time = time + h

# Set the time

bouncing_fmu.time = time

# Set the inputs at the current time (if any)

# bouncing_fmu.set_real,set_integer,set_boolean,set_string (valueref, values)

# Set the states at t = time (Perform the step using x(n+1)=x(n)+hf(x(n), t(n))

x = x + h*dx

bouncing_fmu.continuous_states = x

This is the integration loop for advancing the solution one step. The loop continues until the final time has been reached or if the FMU reported that the simulation is to be terminated. At the start of the loop, the derivatives of the continuous states are retrieved and then the simulation time is incremented by the step-size and set to the model. It could also be the case that the model depends on inputs that can be set using the set_(real/...) methods.

Note that only variables defined in the XML-file to be inputs can be set using the set_(real/...) methods according to the FMI specification.

The step is performed by calculating the new states (x+h*dx) and setting the values into the model. As our model, the bouncing ball also consists of event functions that needs to be monitored during the simulation, we have to check the indicators which are done below.

# Get the event indicators at t = time

event_ind_new = bouncing_fmu.get_event_indicators()

# Inform the model about an accepted step and check for step events

step_event = bouncing_fmu.completed_integrator_step()

# Check for time and state events

time_event = abs(time-Tnext) <= 1.e-10

state_event = True if True in ((event_ind_new>0.0) != (event_ind>0.0)) else False

# Event handling

if step_event or time_event or state_event:

bouncing_fmu.enter_event_mode()

eInfo = bouncing_fmu.get_event_info()

eInfo.newDiscreteStatesNeeded = True

# Event iteration

while eInfo.newDiscreteStatesNeeded:

bouncing_fmu.event_update('0') # Stops at each event iteration

eInfo = bouncing_fmu.get_event_info()

# Retrieve solutions (if needed)

if eInfo.newDiscreteStatesNeeded:

# bouncing_fmu.get_real,get_integer,get_boolean,get_string(valueref)

pass

# Check if the event affected the state values and if so sets them

if eInfo.valuesOfContinuousStatesChanged:

x = bouncing_fmu.continuous_states

# Get new nominal values.

if eInfo.nominalsOfContinuousStatesChanged:

atol = 0.01*rtol*bouncing_fmu.nominal_continuous_states

# Check for new time event

if eInfo.nextEventTimeDefined:

Tnext = min(eInfo.nextEventTime, Tend)

else:

Tnext = Tend

bouncing_fmu.enter_continuous_time_mode()

If an event occurred, we enter the iteration loop and the event mode where we loop until the solution of the new states has converged. During this iteration, we can also retrieve the intermediate values with the normal get methods. At this point, eInfo contains information about the changes made in the iteration. If the state values have changed, they are retrieved. If the state references have changed, meaning that the state variables no longer have the same meaning as before by pointing to another set of continuous variables in the model, for example in the case of dynamic state selection, new absolute tolerances are calculated with the new nominal values. Finally, the model is checked for a new time event and the continuous time mode is entered again.

event_ind = event_ind_new

# Retrieve solutions at t=time for outputs

# bouncing_fmu.get_real,get_integer,get_boolean,get_string (valueref)

t_sol += [time]

sol += [bouncing_fmu.get_real(vref)]

After the loop has finished, by reaching the final time, we plot the simulation results

# Plot the height

P.figure(1)

P.plot(t_sol,N.array(sol)[:,0])

P.title(bouncing_fmu.get_name())

P.ylabel('Height (m)')

P.xlabel('Time (s)')

# Plot the velocity

P.figure(2)

P.plot(t_sol,N.array(sol)[:,1])

P.title(bouncing_fmu.get_name())

P.ylabel('Velocity (m/s)')

P.xlabel('Time (s)')

P.show()

Simulation of Co-Simulation FMUs🔗

Simulation of a Co-Simulation FMU follows the same workflow as the simulation of a Model Exchange FMU. The model we would like to simulate is a model of a bouncing ball, the file bouncingBall.fmu is located in the examples folder in the OCT installation, pyfmi/examples/files/CS1.0/ for version 1.0 and pyfmi/examples/files/ CS2.0/ for version 2.0. The FMU is a Co-simulation FMU and to simulate it, we start by importing the necessary methods and packages into Python:

import pylab as P # For plotting

from pyfmi import load_fmu # For loading the FMU

model = load_fmu('bouncingBall.fmu')

The model object can now be used to interact with the FMU, setting and getting values for instance. A simulation is performed by invoking the simulate method:

res = model.simulate(final_time=2.)

# Retrieve the result for the variables

h_res = res['h']

_res = res['v']

t = res['time']

# Plot the solution

# Plot the height

fig = P.figure()

P.clf()

P.subplot(2,1,1)

P.plot(t, h_res)

P.ylabel('Height (m)')

P.xlabel('Time (s)')

# Plot the velocity

P.subplot(2,1,2)

P.plot(t, v_res)

P.ylabel('Velocity (m/s)')

P.xlabel('Time (s)')

P.suptitle('FMI Bouncing Ball')

P.show()

Cross-platform generation of FMUs🔗

While FMUs are generally specific to the platform they have been compiled on, OCT supports the generation of Windows FMUs on Ubuntu.

In this chapter, we describe how to generate a Windows FMU from Ubuntu (compiled with clang using libraries from Microsoft Visual C (MSVC) 2015). Both the MSVC runtime libraries and the Windows SDK are required.

Prerequisites and setup🔗

This feature requires that clang, version 19, and its cross-compilation toolchain is installed. Installation can be done as follows:

apt-get -y install wget lsb-release curl software-properties-common gnupg

wget https://apt.llvm.org/llvm.sh

chmod +x llvm.sh

./llvm.sh 19 all

Next, one needs to download the necessary MSVC libraries required for compilation. These can be downloaded using the tools and instructions at https://github.com/modelon-community/xwin.

OCT locates the MSVC libraries when compiling a model, by checking in the following order:

- The path specified in the compiler option target_platform_packages_directory.

- The environment variable OCT_CROSS_COMPILE_MSVC_DIR.

- The default location \<OCT_Install_folder>/lib/win64.

Modelica libraries with external code require compiled binaries compatible with the target platform, Windows, and that the external code is compiled using the Microsoft Visual C (MSVC) 2015 compiler. Note that libraries compiled with MSVC versions newer than 2015 could be compatible, since newer versions of MSVC strive to maintain backwards compatibility with older versions. But there is no guarantee that they are. Furthermore, the external code needs to be additionally compiled to work on the native platform, Ubuntu.

Limitations🔗

The following limitations apply to the cross-platform compilation of FMUs:

- OCT only supports cross-platform generation to Windows 64bit.

Example using the Python API🔗

The following example demonstrates how to compile a Windows FMU on Ubuntu, using the OCT Python API:

import os

from pymodelica import compile_fmu

msvc_dir_path = os.path.join('path', 'to', 'your', 'MSVC2015', 'libraries')

compiler_options = {'target_platform_packages_directory' : msvc_dir_path}

model = 'Modelica.Mechanics.Rotational.Examples.CoupledClutches'

fmu = compile_fmu(model, platform = 'win64', compiler_options = compiler_options)

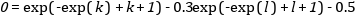

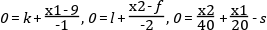

Dynamic Optimization in Python🔗

Introduction🔗

OCT supports the optimization of dynamic and steady state models. Many engineering problems can be cast as optimization problems, including optimal control, minimum time problems, optimal design, and model calibration. These different types of problems will be illustrated and it will be shown how they can be formulated and solved. The chapter starts with an introductory example in A first example and in Solving optimization problems, the details of how the optimization algorithms are invoked are explained. The following sections contain tutorial exercises that illustrate how to set up and solve different kinds of optimization problems.

When formulating optimization problems, models are expressed in the Modelica language, whereas optimization specifications are given in the Optimica extension which is described in Chapter 16 in OPTIMICA Compiler Toolkit User's guide. The tutorial exercises in this chapter assume that the reader is familiar with the basics of Modelica and Optimica.

A first example🔗

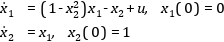

In this section, a simple optimal control problem will be solved. Consider the optimal control problem for the Van der Pol oscillator model:

optimization VDP_Opt (objectiveIntegrand = x1^2 + x2^2 + u^2,

startTime = 0,

finalTime = 20)

// The states

Real x1(start=0,fixed=true);

Real x2(start=1,fixed=true);

// The control signal

input Real u;

equation

der(x1) = (1 - x2^2) * x1 - x2 + u;

der(x2) = x1;

constraint

u<=0.75;

end VDP_Opt;

Create a new file named VDP_Opt.mop and save it in your working directory. Notice that this model contains both the dynamic system to be optimized and the optimization specification. This is possible since Optimica is an extension of Modelica and thereby supports also Modelica constructs such as variable declarations and equations. In most cases, however, Modelica models are stored separately from the Optimica specifications.

Next, create a Python script file and write (or copy-paste) the following commands:

# Import the function for transferring a model to CasADiInterface

from pyjmi import transfer_optimization_problem

# Import the plotting library

import matplotlib.pyplot as plt

Next, we transfer the model:

# Transfer the optimization problem to casadi

op = transfer_optimization_problem("VDP_Opt", "VDP_Opt.mop")

The function transfer_optimization_problem transfers the optimization problem into Python and expresses it's variables, equations, etc., using the automatic differentiation tool CasADi. This object represents the compiled model and is used to invoke the optimization algorithm:

res = op.optimize()

# Extract variable profiles

x1=res['x1']

x2=res['x2']

u=res['u']

t=res['time']

plt.figure(1)

plt.clf()

plt.subplot(311)

plt.plot(t,x1)

plt.grid()

plt.ylabel('x1')

plt.subplot(312)

plt.plot(t,x2)

plt.grid()

plt.ylabel('x2')

plt.subplot(313)

plt.plot(t,u)

plt.grid()

plt.ylabel('u')

plt.xlabel('time')

plt.show()

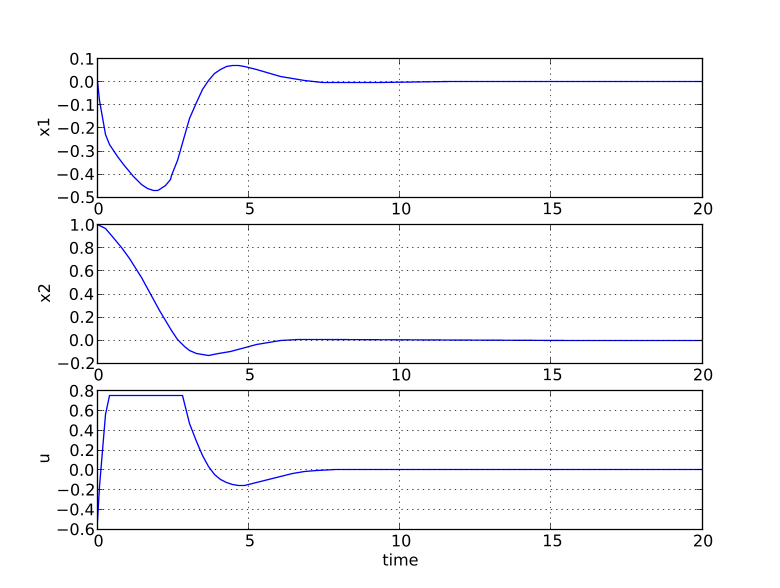

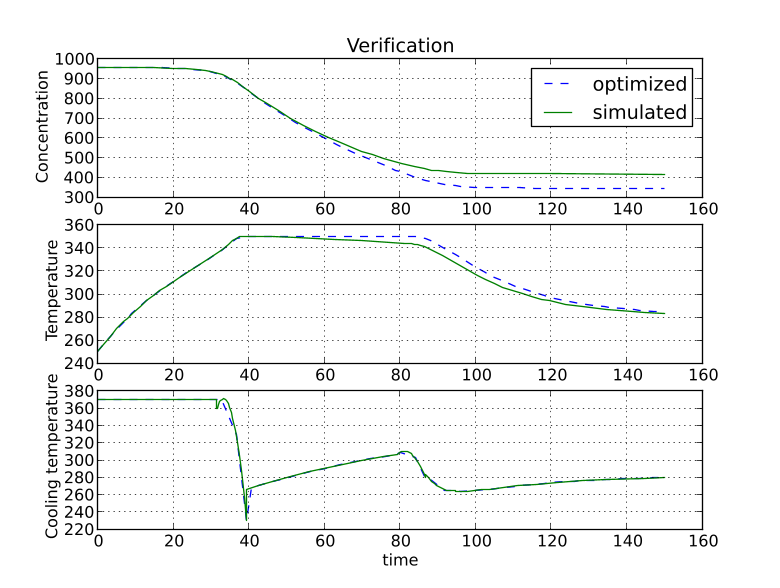

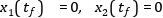

You should now see the optimization result as shown in Figure 6.1.

Solving optimization problems🔗

The first step when solving an optimization problem is to formulate a model and an optimization specification and then compile the model as described in the following sections in this chapter. There are currently two different optimization algorithms available in OCT, which are suitable for different classes of optimization problems.

• Dynamic optimization of DAEs using direct collocation with CasADi. This algorithm is the default algorithm for solving optimal control and parameter estimation problems. It is implemented in Python, uses CasADi for computing function derivatives and the nonlinear programming solver IPOPT for solving the resulting NLP. Use this method if your model is a DAE and does not contain discontinuities.

• Derivative free calibration and optimization of ODEs with FMUs. This algorithm solves parameter optimization and model calibration problems and is based on FMUs. The algorithm is implemented in Python and relies on a Nelder-Mead derivative free optimization algorithm. Use this method if your model is of large scale and has a modest number of parameters to calibrate and/or contains discontinuities or hybrid elements. Note that this algorithm is applicable to models which have been exported as FMUs also by other tools than OCT.

To illustrate how to solve optimization problems the Van der Pol problem presented above is used. First, the model is transferred into Python

op = transfer_optimization_problem("VDP_pack.VDP_Opt2", "VDP_Opt.mop")

All operations that can be performed on the model are available as methods of the op object and can be accessed by tab completion. Invoking an optimization algorithm is done by calling the method OptimizationProblem.optimize, which performs the following tasks:

• Sets up the selected algorithm with default or user-defined options

• Invokes the algorithm to find a numerical solution to the problem

• Writes the result to a file

• Returns a result object from which the solution can be retrieved

The interactive help for the optimize method is displayed by the command:

>>> help(op.optimize)

Solve an optimization problem.

Parameters::

algorithm --

The algorithm which will be used for the optimization is

specified by passing the algorithm class name as string or

class object in this argument. 'algorithm' can be any

class which implements the abstract class AlgorithmBase

(found in algorithm_drivers.py). In this way it is

possible to write custom algorithms and to use them with this

function.

The following algorithms are available:

- 'LocalDAECollocationAlg'. This algorithm is based on

direct collocation on finite elements and the algorithm IPOPT

is used to obtain a numerical solution to the problem.

Default: 'LocalDAECollocationAlg'

options --

The options that should be used in the algorithm. The options

documentation can be retrieved from an options object:

>>> myModel = OptimizationProblem(...)

>>> opts = myModel.optimize_options()

>>> opts?

Valid values are:

- A dict that overrides some or all of the algorithm's default values.

An empty dict will thus give all options with default values.

- An Options object for the corresponding algorithm, e.g.

LocalDAECollocationAlgOptions for LocalDAECollocationAlg.

Default: Empty dict

Returns::

A result object, subclass of algorithm_drivers.ResultBase.

res = vdp.optimize()

In the remainder of this Chapter the available algorithms are described in detail. Options for an algorithm can be set using the options argument to the optimize method. It is convenient to first obtain an options object in order to access the documentation and default option values. This is done by invoking the method optimize_options:

>>> help(op.optimize_options)

Returns an instance of the optimize options class containing options

default values. If called without argument then the options class for

the default optimization algorithm will be returned.

Parameters::

algorithm --

The algorithm for which the options class should be returned.

Possible values are: 'LocalDAECollocationAlg'.

Default: 'LocalDAECollocationAlg'

Returns::

Options class for the algorithm specified with default values.

The options object is essentially a Python dictionary and options are set simply by using standard dictionary syntax:

opts = vdp.optimize_options()

opts['n_e'] = 5

The optimization algorithm may then be invoked again with the new options:

res = vdp.optimize(options=opts)

The optimize method returns a result object containing the optimization result and some meta-information about the solution. The most common operation is to retrieve variable trajectories from the result object:

time = res['time']

x1 = res['x1']

Variable data is returned as NumPy arrays. The result object also contains references to the model that was optimized, the name of the result file that was written to disk, a solver object representing the optimization algorithm and an options object that was used when solving the optimization problem.

Scaling🔗

Many physical models contain variables with values that differ by several orders of magnitude. A typical example is thermodynamic models containing pressures, temperatures and mass flows. Such large differences in scales may have a severe deteriorating effect on the performance of numerical algorithms, and may in some cases even lead to the algorithm failing. In order to relieve the user from the burden of manually scaling variables, Modelica offers the nominal attribute, which can be used to automatically scale a model. Consider the Modelica variable declaration:

Real pressure(start=101.3e3, nominal=1e5);

Here, the nominal attribute is used to specify that the variable pressure takes on values that are on the order of 1e5. In order to use nominal attributes for scaling with CasADi-based algorithms, scaling is enabled by setting the algorithm option variable_scaling to True, and is enabled by default. When scaling is enabled, all variables with a set nominal attribute are then scaled by dividing the variable value by its nominal value, i.e., from an algorithm point of view, all variables should take on values close to one. Notice that variables typically vary during a simulation or optimization and that it is therefore not possible to obtain perfect scaling. In order to ensure that model equations are fulfilled, each occurrence of a variable is multiplied by its nominal value in equations. For example, the equation:

T = f(p)

T_scaled*T_nom = f(p_scaled*p_nom)

when variable scaling is enabled.

The algorithm in Dynamic optimization of DAEs using direct collocation with CasADi also has support for providing trajectories (obtained by for example simulation) that are used for scaling. This means that it usually is not necessary to provide nominal values for all variables and that it is possible to use time-varying scaling factors.

For debugging purposes, it is sometimes useful to write a simulation/optimization/initialization result to file in scaled format, in order to detect if there are some variables that require additional scaling. The option write_scaled_result has been introduced as an option to initialize, simulate and optimize methods for this purpose.

Dynamic optimization of DAEs using direct collocation with CasADi🔗

Algorithm overview🔗

The direct collocation method described in this section can be used to solve dynamic optimization problems, including optimal control problems and parameter optimization problems. In the collocation method, the dynamic model variable profiles are approximated by piecewise polynomials. This method of approximating a differential equation corresponds to a fixed step implicit Runge-Kutta scheme, where the mesh defines the length of each step. Also, the number of collocation points in each element, or step, needs to be provided. This number corresponds to the stage order of the Runge-Kutta scheme. The selection of mesh is analogous to the choice of step length in a one-step algorithm for solving differential equations. Accordingly, the mesh needs to be fine-grained enough to ensure a sufficiently accurate approximation of the differential constraint. The nonlinear programming (NLP) solver IPOPT is used to solve the nonlinear program resulting from collocation. The needed first- and second-order derivatives are obtained using CasADi by algorithmic differentiation. For more details on the inner workings of the algorithm, see [Mag2015] and Chapter 3 in [Mag2016].

The NLP solver requires that the model equations are twice continuously differentiable with respect to all of the variables. This for example means that the model can not contain integer variables or if clauses depending on the states.

Optimization models are represented using the class OptimizationProblem, which can be instantiated using the transfer_optimization_problem method. An object containing all the options for the optimization algorithm can be retrieved from the object:

from pyjmi import transfer_optimization_problem

op = transfer_optimization_problem(class_name, optimica_file_path)

opts = op.optimize_options()

opts? # View the help text

res = op.optimize(options=opts)

The standard options for the algorithm are shown in Table 5. Additional documentation is available in the Python class documentation. The algorithm also has a lot of experimental options, which are not as well tested and some are intended for debugging purposes. These are shown in Table 6, and caution is advised when changing their default values.

| Options | Default | Description |

|---|---|---|

| n_e | 50 | Number of finite elements |

| hs | None | Element lengths. Possible values: None, iterable of floats and "free" None: The element lengths are uniformly distributed. iterable of floats: Component i of the iterable specifies the length of element i. The lengths must be normalized in the sense that the sum of all lengths must be equal to 1. "free": The element lengths become optimization variables and are optimized according to the algorithm option free_element_lengths_data. WARNING: The "free" option is very experimental and will not always give desirable results. |

| n_cp | 3 | Number of collocation points in each element. |